Cognitive neuroscience has provided additional techniques for investigations of spatial and geographic thinking. However, the incorporation of neuroscientific methods still lacks the theoretical motivation necessary for the progression of geography as a discipline. Rather than reflecting a shortcoming of neuroscience, this weakness has developed from previous attempts to establish a positivist approach to behavioral geography. In this chapter, we will discuss the connection between the challenges of positivism in behavioral geography and the current drive to incorporate neuroscientific evidence. We will also provide an overview of research in geography and neuroscience. Here, we will focus specifically on large-scale spatial thinking and navigation. We will argue that research at the intersection of geography and neuroscience would benefit from an explanatory, theory-driven approach rather than a descriptive, exploratory approach. Future considerations include the extent to which geographers have the skills necessary to conduct neuroscientific studies, whether or not geographers should be equipped with these skills, and the extent to which collaboration between neuroscientists and geographers can be useful.

EVE is a framework for the setup, implementation, and evaluation of experiments in virtual reality. The framework aims to reduce repetitive and error-prone steps that occur during experiment-setup while providing data management and evaluation capabilities. EVE aims to assist researchers who do not have specialized training in computer science. The framework is based on the popular platforms of Unity and MiddleVR. Database support, visualization tools, and scripting for R make EVE a comprehensive solution for research using VR. In this article, we illustrate the functions and flexibility of EVE in the context of an ongoing VR experiment called Neighbourhood Walk.

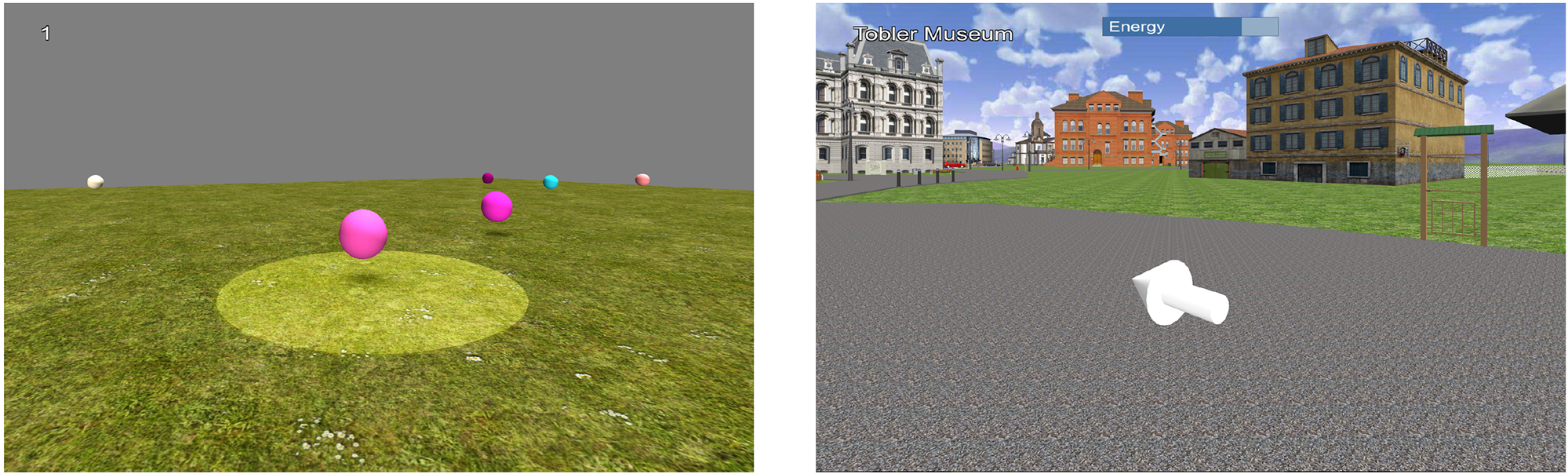

Previous research in spatial cognition has often relied on simple spatial tasks in static environments in order to draw inferences regarding navigation performance. These tasks are typically divided into categories (e.g., egocentric or allocentric) that reflect different two-systems theories. Unfortunately, this two-systems approach has been insufficient for reliably predicting navigation performance in virtual reality (VR). In the present experiment, participants were asked to learn and navigate towards goal locations in a virtual city and then perform eight simple spatial tasks in a separate environment. These eight tasks were organised along four orthogonal dimensions (static/dynamic, perceived/remembered, egocentric/allocentric, and distance/direction). We employed confirmatory and exploratory analyses in order to assess the relationship between navigation performance and performances on these simple tasks. We provide evidence that a dynamic task (i.e., intercepting a moving object) is capable of predicting navigation performance in a familiar virtual environment better than several categories of static tasks. These results have important implications for studies on navigation in VR that tend to over-emphasise the role of spatial memory. Given that our dynamic tasks required efficient interaction with the human interface device (HID), they were more closely aligned with the perceptuomotor processes associated with locomotion than wayfinding. In the future, researchers should consider training participants on HIDs using a dynamic task prior to conducting a navigation experiment. Performances on dynamic tasks should also be assessed in order to avoid confounding skill with an HID and spatial knowledge acquisition.

We tell stories to save the past. Most of these stories today are experienced through reading texts, and we consequently are denied the visceral experience of the past even though we strive to recapture and animate lost worlds through our distinct senses. Virtual Plasencia is our highly realistic and interactive model of the Spanish medieval city of Plasencia. Virtual Plasencia offers dynamic new ways of storytelling via visual and auditory senses. By navigating the three-dimensional city simulation, users begin to experience the sights and sounds of daily life in a medieval city, meander its cobbled streets and contemplate its principal structures and residences, and observe human interactions from different (e.g., religious, personal, communal) points of view. Inside Virtual Plasencia, users encounter people and places in ways that cannot usually be achieved through traditional written narratives. The opportunity to observe historical events in loco represents a valuable new form of representation of the past.

One significant feature of urbanisation in the twenty-first century is the increase in large, complex and densely populated city quarters. Airports, shopping precincts, sports venues and cultural facilities increasingly combine with generic function buildings such as hotels, housing, businesses and offices to produce horizontal and vertical nodes in a city. The capacity of such city quarters to bring large numbers of people into proximity produces crowds of unprecedented complexity. The manner in which such crowds ‘behave’ in space by aggregating, disaggregating, flowing or stalling generates new kinds of urban experience that can be thrilling, bewildering, stressful or even threatening. In turn, this creates a set of complex challenges for architectural design and its capacity to understand human behaviour and crowd dynamics.

Signage systems are critical for communicating environmental information. Signage that is visible and properly located can assist individuals in making efficient navigation decisions during wayfinding. Drawing upon concepts from information theory, we propose a framework to quantify the wayfinding information available in a virtual environment. Towards this end, we calculate and visualize the uncertainty in the information available to agents for individual signs. In addition, we expand on the influence of new signs on overall information (e.g., joint entropy, conditional entropy, mutual information). The proposed framework can serve as the backbone for an evaluation tool to help architects during different stages of the design process by analyzing the efficiency of the signage system.
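
The information-theoretic quantities named in this abstract can be illustrated with a minimal sketch. It assumes, purely for illustration, that two signs are modeled as discrete random variables X and Y with a known joint probability table; the abstract does not say how the framework derives these probabilities, so the function names and example values below are hypothetical rather than the authors' method.

```python
import math


def entropy(probs):
    """Shannon entropy in bits of a discrete distribution (zero terms skipped)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def information_measures(joint):
    """Compute standard measures from a joint table where joint[i][j] = P(X=i, Y=j).

    X and Y stand in for two signs (an assumption made for this sketch).
    """
    p_x = [sum(row) for row in joint]        # marginal distribution of X
    p_y = [sum(col) for col in zip(*joint)]  # marginal distribution of Y
    h_x = entropy(p_x)
    h_y = entropy(p_y)
    h_xy = entropy(p for row in joint for p in row)  # joint entropy H(X,Y)
    return {
        "H(X)": h_x,
        "H(Y)": h_y,
        "H(X,Y)": h_xy,
        "H(Y|X)": h_xy - h_x,        # chain rule: H(X,Y) = H(X) + H(Y|X)
        "I(X;Y)": h_x + h_y - h_xy,  # mutual information
    }


# Hypothetical joint distribution: the two signs are seen or missed together
# most of the time, so they are strongly but not perfectly dependent.
example_joint = [
    [0.4, 0.1],
    [0.1, 0.4],
]

if __name__ == "__main__":
    for name, value in information_measures(example_joint).items():
        print(f"{name} = {value:.3f} bits")
```

Under this reading, a new sign that shares high mutual information with an existing sign adds little to the joint entropy, which is one way to interpret the influence of new signs on overall information.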

Using novel virtual cities, we investigated the influence of verbal and visual strategies on the encoding of navigation-relevant information in a large-scale virtual environment. In 2 experiments, participants watched videos of routes through 4 virtual cities and were subsequently tested on their memory for observed landmarks and their ability to make judgments regarding the relative directions of the different landmarks along the route. In the first experiment, self-report questionnaires measuring visual and verbal cognitive styles were administered to examine correlations between cognitive styles, landmark recognition, and judgments of relative direction. Results demonstrate a tradeoff in which the verbal cognitive style is more beneficial for recognizing individual landmarks than for judging relative directions between them, whereas the visual cognitive style is more beneficial for judging relative directions than for landmark recognition. In a second experiment, we manipulated the use of verbal and visual strategies by varying task instructions given to separate groups of participants. Results confirm that a verbal strategy benefits landmark memory, whereas a visual strategy benefits judgments of relative direction. The manipulation of strategy by altering task instructions appears to trump individual differences in cognitive style. Taken together, we find that processing different details during route encoding, whether due to individual proclivities (Experiment 1) or task instructions (Experiment 2), results in benefits for different components of navigation-relevant information. These findings also highlight the value of considering multiple sources of individual differences as part of spatial cognition investigations.

Spatial navigation in the absence of vision has been investigated from a variety of perspectives and disciplines. These different approaches have progressed our understanding of spatial knowledge acquisition by blind individuals, including their abilities, strategies, and corresponding mental representations. In this review, we propose a framework for investigating differences in spatial knowledge acquisition by blind and sighted people consisting of three longitudinal models (i.e., convergent, cumulative, and persistent). Recent advances in neuroscience and technological devices have provided novel insights into the different neural mechanisms underlying spatial navigation by blind and sighted people and the potential for functional reorganization. Despite these advances, there is still a lack of consensus regarding the extent to which locomotion and wayfinding depend on amodal spatial representations. This challenge largely stems from methodological limitations such as heterogeneity in the blind population and terminological ambiguity related to the concept of cognitive maps. Coupled with an over-reliance on potential technological solutions, the field has diffused into theoretical and applied branches that do not always communicate. Here, we review research on navigation by congenitally blind individuals with an emphasis on behavioral and neuroscientific evidence, as well as the potential of technological assistance. Throughout the article, we emphasize the need to disentangle strategy choice and performance when discussing the navigation abilities of the blind population.

We present the results of a study that investigated the interaction of strategy and scale on search quality and efficiency for vista-scale spaces. The experiment was designed such that sighted participants were required to locate “invisible” objects whose locations were marked only with audio cues, thus enabling sight to be used for search coordination, but not for object detection. Participants were assigned to one of three conditions: a small indoor space (~20 m²), a medium-sized outdoor space (~250 m²), or a large outdoor space (~1000 m²), and the entire search for each participant was recorded either by a laser tracking system (indoor) or by GPS (outdoor). Results revealed a clear relationship between the size of space and search strategy. Individuals were likely to use ad-hoc methods in smaller spaces, but they were much more likely to search large spaces in a systematic fashion. In the smallest space, 21.5% of individuals used a systematic gridline search, but the rate increased to 56.2% for the medium-sized space, and 66.7% for the large-sized space. Similarly, individuals were much more likely to revisit previously found locations in small spaces, but avoided doing so in large spaces, instead devoting proportionally more time to search. Our results suggest that even within vista-scale spaces, perceived transport costs increase at a decreasing rate with distance, resulting in a distinct shift in exploration strategy type.
